RIP Gene Hackman

One of the all-time greats. He gave us so much. I consider this role a summation of his gifts. This is one of my favorite scenes, in any movie, a master class in comic acting (and writing, and directing), with two marvelous performers simply playing, in thrumming joy.

Move Fast and Break Things

I’m approaching the third week of a constant headache, which began when I had a bad cold for a few days, and which has not relented though the cold is long gone. It’s not the worst headache I’ve ever had. It just hasn’t gone away in three weeks. It does not respond to any medicine. It waxes and wanes, a little, but is always present. I’ve had blood drawn and all the tests. All perfect.

Twenty-two years ago, when my wife and I were first dating, we attended an afternoon game in Oakland between the Athletics and the Chicago White Sox. We arrived a little late, found our seats. I stood up to get us a couple of beers, stepped into the aisle, and was hit in the temple by a foul ball coming hard and straight off the bat.

I was knocked out for a couple seconds. When I came to, I was lying on the stairs. A stadium worker hustled me up to the mezzanine and into a small supply room. He detained me there until I signed some papers. I remember feeling confused and, because I was on my way to get beer, did not wish to be detained any further, so I signed whatever it was, and received no copy. I am pretty sure all of this actually happened.

I bought beers, returned to my seat, where my new girlfriend, now wife, was in a panic. But I felt fine, if a little dazed. A stranger in a nearby seat congratulated me on my hard head and told me it was unfair—I should have at least received the errant ball for my trouble. No idea where that went. I said, in my opinion, I should not have had to pay for the beers.

I woke up in the middle of the night last night, my headache reappearing immediately, thinking about this incident in the way I always have: that this long-ago minor injury, which could have killed me on the spot but instead did nothing at all, would eventually cause my death in some long-delayed way. And that, maybe, this mysterious headache was the first sign of my last days.

I have a brain MRI scheduled for next week. But when I spoke to my doctor I joked that the headache might simply be a symptom of my Trump Derangement Syndrome. She said she was suffering from this ailment, as well, losing sleep and sanity as a provider who delivers gender-affirming care to minors, and was now swamped with new patients, after multiple area hospitals had begun denying such treatments. President Shithead hates transgender people, you see, especially teenagers, and has been using his Sharpie to abolish them in a series of malevolent Executive Orders.

But, of course, that is somehow the least of it. In the weeks since the inauguration, President Shithead has caused one of the worst constitutional crises in our history. The major news outlets have exhausted all available synonyms and are beginning to call his actions what they literally are: illegal. His corruption, cruelty, arrogance and stupidity make a stereotypical schoolyard bully look like Snuggle, the fabric softener teddy bear; and his lies would make a veteran cartel assassin blush.

His French bulldog, Elon Musk, meanwhile, has been applying his fellow definitely-normal-human tech bro Mark Zuckerberg’s famous toddler-friendly corporate dictate, Move Fast and Break Things, to the “maximally transparent,” completely secretive work of his “Department of Government Efficiency,” where the “things” being broken are the operations of the executive branch agencies, the rule of law, and our constitutional checks and balances, and where “move fast” means “do it quickly enough so that by the time the courts intervene it’s far too late.” The “department” is actually not a real department or agency, nor does any of it have anything to do with efficiency, nor is Musk, according to the White House, actually working for it at all, let alone leading it.

But each day “DOGE” releases tweets, on Musk’s personal social media site, X, lying about the “waste, fraud and abuse” they claim to have found. All of it lacks context and detail, and all of it reveals they simply don’t understand anything about what they’re doing, what the agencies do, where the actual waste and inefficiencies are, and don’t give a shit. MAGA fucking loves it, though, eating it up like a dog discovering a new kind of delicious feces.

The Congressional Republicans are prostrate before their Supreme Leader, their slacks and undies bunched around their ankles, and the Democrats are tweeting furiously, and fundraising righteously. Meanwhile, federal judges are left to explain science, the Constitution, history, and democratic principles to Trump’s lawyers, as thousands and thousands of federal workers are being fired and sensitive agency data, including Americans’ personal records, are being hoovered up onto the laptops of Elon’s teenage lackeys.

The legitimate news outlets—you know, the “mainstream media” that everyone categorically despises—are absolutely overwhelmed by the firehose of malfeasance and chicanery, and are nonetheless doing yeoman’s work reporting on as much as they possibly can, in increasingly incredulous tones. At the same time, the blogosphere of stacks and blueskies and Xs and “independent journalism” is complaining bitterly about the total lack of news coverage of, for example, the thousands of Americans protesting in the streets on a now-weekly basis. Admittedly, these events have only been covered by the New York Times, the Washington Post, the Atlantic, ABC, NBC, CNN and CBS, Forbes, Fortune, Bloomberg, nearly all local outlets, and even Fox News. But, you know, the MSM, man—just a bunch of shills.

It’s all absolutely exhausting, although my wife and older son and I have now attended two big protests at our nearby state capitol, which have at least been entertaining and cathartic. In spite of my vague nausea. And my persistent headache.

A SCOTUS Prediction

Sometimes I make predictions that turn out to be correct, but I haven’t written them down, so I don’t have the receipts to boast about my foresight. THIS. ENDS. NOW.

(Most of them are silly obscurities. For example, I predicted, based only on the announcement that David Fincher was going to direct The Girl with the Dragon Tattoo and the fact that I had read the novel, that Daniel Craig would be cast in the lead role. And I predicted that, even though he had said he would only produce The Hobbit, and that Guillermo del Toro had been hired to direct, Peter Jackson was going to end up directing it, and that he would break it into multiple parts. See? Who cares?)

This one is about the Supreme Court.

President Dogshit Taintlick signed an executive order last week to eliminate “birthright citizenship” for babies born to undocumented immigrants and temporary visa holders. The order was, as a Reagan-appointed federal district judge said, when he stopped it from taking immediate effect, “blatantly unconstitutional.” Twenty-two states had already sued to stop the order. That it wasn’t immediately fifty states shows the degree to which Taintlickism has captured the Republican Party.

When this reaches the Supreme Court—as it will almost certainly do relatively soon, because it’s a constitutional crisis in a can—my prediction is they will smack it down very quickly.

And, further, it will be a 9-0 decision.

I despise six of the justices on the court. But, unless the case has to do with women’s rights, state-religion-establisher Amy Boney-Carrot often joins the liberal wing, along with John “Milquetoast” Roberts. They both seem to be okay with the Constitution, as long as women and minorities are oppressed, which this order only tangentially entails. (5-4)

The rapist and the nepo-baby—Kavanacht and Goresuck—are conservative dickholes, but not so hypocritical that they will utterly abandon their own conservatism solely to stick it to the browns. They’re racists, but they’re right-wing conservative establishment racists. (7-2)

As for the lunatic fringe, we have Uncle Thomas and Gruppenführer A****. Thomas might be the only Black man in America to fervently wish the South had won. As for Torquemada de Infierno, the most far-right member of the Court in history, he would like nothing better than to deny citizenship to everyone who is not a member of Opus Dei, and put everyone else in camps until they confess their sins, flagellate themselves and hand over a pound of flesh.

But they have painted themselves into a darkly comic corner, on this one. They are fervent “originalists,” as disciples of the comparatively far-left and puppy-friendly Antonin Scalia, which means that if James Madison didn’t know about it, it still doesn’t exist. They have ruled that the only firearms we can regulate, for example, are those that existed in the 1790s. Yes, the Deep State is coming for your blunderbusses.

In this case, though, not only is the relevant Supreme Court precedent almost 130 years old, the original understanding of the text of the 14th Amendment by its authors and ratifiers 157 years ago makes this executive order unconstitutional. So, unless they want to render their hypocrisy in letters large enough for King George to read without spectacles, they’re stuck. (9-0)

The Four Types of T*ump Voters and How to Deal With Them

One of my core contradictions is that I profess to believe in empathy for all, and the importance of radical tolerance, but have a very difficult time putting this into practice. At one and the same time, I reject as dangerous the sentiment, so often expressed by left-wingers, that all of T*ump’s voters must be comprehensively rejected, that friendships, romance, family connections and even tolerant coexistence are verboten; yet I am so righteously furious with all of them that I ridicule them at every turn, disparaging them as ignorant fools at best and slavering fascists at worst. I have not communicated in any form with my full MAGA aunt for years, and have little interest in ever communicating with her again, though we were never close in the first place. But my responses to her posts back when I was on Facebook likely severed that tie forever.

I struggle with this contradiction a great deal. Intellectually, I do not fault all of his voters for their support. I have long been an outspoken believer in the need to step outside of our superficial partisan divisions to find common ground from which we can work together on the major problems of our time. Climate change, to me, is the big example. We won’t be able to respond effectively if we don’t do it together, period. So far human beings are failing spectacularly in this regard, and if it continues as it has been, we will all suffer the worst of the potential consequences—and the poorest people will suffer first and most.

But, as LGBTQ people will often point out, you can’t compromise with people who want you dead. I find this to be a painfully convincing argument. Even though I have major disputes with the manner in which transgender activists have pressed their case in recent years, it is impossible to deny that theirs is an existential cause, or as close as makes no difference.

There is, however, a readily identifiable fallacy here that I think can provide some guidance. Though our two-party system forces nearly all of us to join one of two sprawling, incoherently broad ideological camps when we vote (unless we choose to throw our votes away on some impossible third-party candidacy or hapless protest), this bifurcation need not distort our understanding of the smaller groups within the parties.

The 2020 Nationscape survey was a massive poll of nearly half a million American voters, conducted over the course of the 2020 election cycle, that produced a more nuanced understanding of the beliefs and values of the electorate than the D/R binary offers. Its data yielded six identifiable political subgroups along the left-right spectrum, three on the left and three on the right. It’s a fascinating survey, and the basis for this provocative opinion piece from the New York Times, detailing what proportional representation might look like in the United States and how we could achieve it. For the record, at this point, I don’t believe we will survive as a nation if we do not adopt this approach to our self-government.

In the spirit of that, but mostly to help me differentiate my approach toward different types of people, for the sake of my own sanity and my personal values, I present my views on the Four Types of T*ump Voters and How to Deal With Them.

Moving from left to right…

THE IGNORANT MIDDLE

Ignorant does not mean stupid, or evil, or weird. It means without knowledge. It is the normal state of human life. Here are a couple of quick facts:

Around 35% of American adults have bachelor’s degrees.

And according to a Fox News press release from December, the Fox News Channel “closed out December with its highest cable news share for the month in network history across all categories.” The statement—remember, they’re boasting here—goes on to explain: “Across primetime, FNC commanded 2.1 million viewers and 240,000 in the 25-54 demo accounting for 71% of the cable news audience and in total day the network secured 1.5 million viewers and 178,000 in the 25-54 demo, marking 68% of the cable news audience.”

The 2.1 million figure is the average viewership at night (“primetime”) for the month; the “total day” figure of 1.5 million viewers, as you might guess, is for the entire day, including primetime.

So, two things. One, if we take the rate of college attainment as a rough measure of “being educated,” nearly two-thirds of American adults are not educated. Two, as far as the notion that T*ump voters are “all just sitting around watching Fox News all day,” which is an oft-repeated explanation for right-wing ignorance, this is manifestly, comically false: even the primetime average of 2.1 million viewers is less than 3% of the roughly 77 million people who voted for him in 2024. While the Fox News Channel is not the only source for Fox News, the larger point is that voters’ media consumption patterns are much different from what we assume they are.

The voters of the “Ignorant Middle,” in fact, pay very little attention to news of any kind. They don’t read newspapers in print or online, they don’t watch TV news, and the “news” they see on the internet generally comes to them via social media, bereft of context or any special authority; it might be outright false or heavily biased, and may not come from a news organization, or even a journalist, at all. Much of it is second- or third-hand, and they have little time and less incentive to find out whether what they’ve seen is true in any sense. Algorithms have only one purpose in this context, which is to use whatever means available to keep people on the site, scrolling and seeing advertisements. It’s well understood that appealing to users’ emotions, particularly their outrage, is the most effective way to do this.

But why don’t they try harder to get the facts?

The group we are talking about does not have the time or the patience to sort through all of these complicated things. They are working and raising families, living paycheck to paycheck, running up huge credit card bills to cover the gaps, highly vulnerable to the wild swings of the economy. High gas prices hurt their bottom line every day, as do high prices at the grocery store, usurious credit card and bank fees, and our rapacious and predatory healthcare system. In the latter category, I don’t just mean the obscene costs of healthcare, but the open-air conspiracy of pharmaceutical companies, the federal government and physicians to addict chronic pain patients to opioids (such as OxyContin and fentanyl), knowing that many of them would die. That may sound paranoid, but it’s now a matter of public record.

Also in this group are many people of color and non-white ethnicities, who have all of the above problems, as well as additional systemic burdens. It can be deadly to simply be Black in America. Immigrants, too, struggle in many ways that are often incomprehensible to native-born Americans. And American Indians have the worst outcomes of any population by all measures.

Also in this group are young people—students and newly minted professionals—who got degrees because they were assured that a degree was a ticket to the middle class, but who can’t find work in their field, or buy a house, or afford any of the finer things their parents had, or obtain credit. Many young people, even college graduates, are deeply ignorant when it comes to basic civics and simply have never learned how to distinguish between factual news reporting and propaganda, or even understand the traditional difference between news and opinion.

So how do we deal with this cohort of T*ump voters?

Empathy, compassion, patience, education and humility. We have failed them as educators and as highly-trained professionals, as public servants, as employers, as health care providers—in short, in every possible way. The price of eggs and gas matters. From their perspective, the government and the educated elite are useless to them unless they address the actual issues they face. And we emphatically do not.

These are basically good people. They do not vote for him because they want to hurt anyone. They vote for him because they are desperate for a change, anything that has a chance of helping them in their daily lives. This is perfectly rational, by the way. Ignorant, but rational.

THE SELFISH, CYNICAL AND IDEOLOGICAL ELITES

The political and professional class of the Republican Party. These people are wealthy or on their way to being wealthy, and vote for one reason: to protect and enrich themselves and their own class. They are the Republicans of Congress, business executives, lawyers, Wall Street managers and many, many others—don’t get me started on Big Church. They loathe Trump as a person, but they see him as a highly useful idiot who will do everything he can to make them richer and prevent everybody else in society from attaining personal wealth or even a foothold in the middle class. They are the Tom-Wolfian “Masters of the Universe,” and they control our country. They are the tech billionaires, who have destroyed our public discourse, our mental health, our political consciousness, our unity, for their own gain. They run Big Pharma, Big Ag and Big Oil. They are deliberately and with malice aforethought destroying our environment so they can get rich.

T*ump himself is nominally one of this class. His own self-loathing is readily apparent in everything he says and does. He desperately wants to be accepted by this club and their failure to accept him is the greatest wound in his life. He’s taking it out, ironically, on everyone else.

How to deal with this cohort? This is an easy one.

Absolute, undiluted, unwavering contempt. Resistance, courage, protest, democracy and art. Solidarity and refusal. No quarter given. Ever.

MAGA

But wait, aren’t they all MAGA? No. MAGA is a cult with a charismatic leader who demands perfect loyalty and capitulation. It is an intricately but poorly assembled, ugly facade of pure stupidity and lies. To be MAGA, you can be from any of these other groups, including even being a former Democrat. MAGA is not politics. It’s a new religious ideology based on a hermetic false reality. It is a cesspool, through the looking glass. An Upside Down, stranger than anything. An Orwellian desert where poor, deluded fools stumble behind a shining orange pillar of burning feces. A MAGA hat doesn’t make you MAGA. You must first remove your brain.

Avoidance, contempt, pity.

LITERAL FASCISTS

T*ump may admire fascist dictators, but he lacks the discipline and ideological coherence to be one. He is merely a self-dealing, hypocritical, racist, predatory, lying sack of shit—and an accelerant for actual fascists. I’m talking about the pathetic little boys and girls, but mostly boys, in face masks and matching jackets and jackboots, who wield swastikas and torches, and speak openly of murdering, basically, anyone, but especially every conceivable group that is not them.

Now, I believe firmly in free speech and the right to assemble. Hate speech is free speech. That is what our Constitution requires, and I believe that is what liberal democracy requires, and I will defend even the most wretched, deranged fascist’s right to speak.

However. Unlike most people, I do not automatically repudiate political violence. After all, what would the United States be without political violence? We would still have a King. Right now. The solution to this is the only thing the fascists and I can agree on.

So how do we deal with them—the second they stop merely speaking?

Blunt objects. If that doesn’t work, sharp objects. If that doesn’t work, small, supersonic chunks of metal. We crush them, with extreme prejudice.

Hope this helps! Happy 2025!

Interpreting Emilia Pérez

The Oscar nominations are finally here and it’s a largely predictable batch, with a few surprises. I don’t have a strong opinion about who should or shouldn’t win. I just always find the annual tradition entertaining in a snarky kind of way.

One surprise was that the controversial French-made, Spanish-language musical, Emilia Pérez, received 13 nominations, a record for a non-English-language film. That’s so many nominations (including Picture, Director, Actress in both categories, and Song twice, among others) that it’s almost as if the Academy is trolling the film’s legion of haters. The Academy is definitely not doing that. It’s not that self-aware.

I’ve written in a few places about the film, which was one of my favorites of the year. I’m returning to it, briefly, because I took note of this phrase from the nominations story in the New York Times today, which called the film “a musical exploration of trans identity.” The article was co-written by Hollywood reporter Brooks [sic] Barnes. Barnes, in my view, has always had a bit of a casual attitude toward accuracy in his reporting and writing, something I have complained to him about in the past, by email; to his credit, he actually responded. He’s hardly the only reporter on the entertainment beat who doesn’t take the topic particularly seriously, but I still find it irritating.

“A musical exploration of trans identity” is not a bad shorthand for the film, for readers who have not seen it, will not see it and have generally not followed the awards season bickering. But, while it is a musical, it is not an exploration of trans identity in any but the most superficial sense, in my opinion. And this description plays directly into the arguments happening on “Film Twitter,” which is probably not on Twitter (sorry, X) anymore, right? It has maybe moved to Letterboxd and elsewhere (Bluesky? Substack? Comrade Grozny’s Workers Party Monthly Plenary Session and Borscht Pit?). Wherever that chattering cohort now resides, it is by reputation a crowd that claims to love movies but actually loves, much, much more, tearing them to shreds for not being sufficiently PC, er, woke, er, ideologically pure. And it often does not watch them at all, or turns them off at the first sign of potential divergence from its suffocating groupthink.

The root question, it seems to me, is one of intention. What did the writer/director, Jacques Audiard, intend? If he intended to make an authentic exploration of trans identity and the Mexican narcos, man, did he fuck up. But did he intend that?

The question of intent can be interesting, but I am the type of critic who believes that artistic intention is mostly irrelevant in discussions of a work’s merit. If intention matters at all, it ceases to do so the moment the film is out of the director’s hands. Once you watch it, all that matters is interpretation. Interpretation is tricky and sometimes all we can really manage is, well, I thought it was pretty good or I didn’t like it much, and that’s okay. You’re not required to have an interesting opinion or really much of an opinion at all. I know that might be shocking to those who live online, but it turns out to be true.

But if you do wish to render an opinion, it would be nice if it took the film (the book, the painting, the sex party, the five-tier cake) on its own terms. In my view, this means that you might speculate about the artist’s intention, initially at least, just so you can get your bearings, before rendering a personal judgement that might not actually take that intention into account.

If Audiard intended Emilia Pérez to be a dramatic musical with an authentic worldview, why is the story he tells so absurdly high concept? A notorious Mexican cartel boss hires a lawyer to help him disappear so he can undergo a long-sought gender transition. She emerges as Emilia Pérez, who struggles to make up for her past sins. That is an objectively ridiculous concept—if there is any intention to present a realistic story. But maybe Audiard is an idiot, and this narrative strikes him as reasonably grounded. I don’t know; but, you see, it doesn’t matter—because in no way does the resulting film support that interpretation, no matter what the intention. I really have only one clue to his intention—I am aware that he originally conceived Emilia Pérez as an opera. That may tell me less than I think it does, however.

If he wanted the film to be an authentic tale of the transgender experience, even before you consider any of the narrative dealing with that aspect, he already blew it by telling such a crazy, unrealistic story. To me, it seems obvious that the writer/director is using transition as a melodramatic stakes-raising metaphor, exactly the same way he is using the concept of the narcos. I can understand why these uses might be offensive to some people—some transgender people may not be particularly thrilled about being anyone’s metaphor, and the victims of the cartels have perhaps suffered too much to appreciate the finer points of how those horrors can be used to raise the stakes in a melodrama. But these are relatively small criticisms. Popular narratives have taken exactly these kinds of shortcuts ever since the invention of popular narrative.

How does it make sense to criticize a high-concept, high-camp melodrama for not being realistic? That’s like criticizing the Fast & Furious movies for not obeying the laws of physics. It doesn’t make sense, but knock yourselves out.

It breaks my heart a little, though, that the first movie to ever have a trans woman Best Actress nominee—a fact that should have everyone who isn’t a MAGA fascist rejoicing, regardless of whatever their frankly cinema-illiterate hot takes might be—is being so completely trashed by the left. The level of savage vitriol and Manichean simple-mindedness is deeply depressing.

You know what? I hope they win everything.

Fucking, Flag-Waving and Free Speech

Ever since high school, free speech has been a major political issue for me. In my senior year, I helped found a literary magazine that was immediately censored by the administration before we could publish our first issue (the printer ratted us out). They objected to the use, in a couple of pieces of writing (including one of mine), of the word fuck. Admittedly, a word that no high school student would use and one that, therefore, should not appear in a student publication. As a fellow student reminded me, in the midst of the controversy, “Good writers don’t need to use bad words.”

To which I say, Fuck him. Obviously.

But we went ahead and censored the magazine because we wanted to publish it. And I say with some pride that, as far as I can tell, the magazine still exists, thirty-three years later.

That run-in with the administration was only the first round in a button-pushing campaign against the principal that I waged for the rest of the year. A skit I wrote for the drama club to perform at a pep assembly was also censored (I placed satirically sexist language in a character’s mouth), as was a poster advertising the literary magazine (the magazine was called Chautauqua; on the poster, I advised students on its pronunciation by spelling it “Shit Aqua”). Another time, I wore a ball cap to school (a blank ball cap), in violation of a dress code rule that made no sense to me. I told myself that I would remove the hat if any authority figure could adequately explain the rule. None could, of course, though several tried. And believe me, the explanations those adults gave were fucking stupid.

That little stunt resulted in my being suspended for the rest of the day. My deeply amused mother had to come pick me up. She still, to this day, mocks the Vice Principal for his explanation that he doesn’t always want to wear a tie to school, but it’s just something he has to do. (I counted myself lucky that I got rounded up before my afternoon singing class. That instructor—an extraordinary choir teacher—was a terrifying little German bulldog, with the unlikely name of Merlin. I had personally seen him reduce a disobedient student, who outweighed him by about 100 pounds and was at least two heads taller than him, to a quivering wreck by doing little more than planting himself squarely in his path, bracing his compact frame for potential violence and glaring at him with Stygian fury. My hat might not have survived.)

Then, there was the final matter of a certain massive water balloon fight that happened in the cafeteria on the last day of school. I was not the organizer of this event. But it’s possible that, when caught balloon-handed by staff, instead of not throwing the balloon, I became the first person to throw a balloon, thus setting off a brief but intense battle in the eternal war of Giggling Teenager v. Hapless Adult. I was required to attend Saturday detention, under threat of not receiving my diploma at Sunday’s ceremony. Meaning, the literal paper.

To this day, I do not have a high school diploma.

Not for nothing—in fact, let’s be honest, for everything—I was highly motivated in all of this by the fascination and curiosity my proudly rebellious behavior inspired in many of the most beautiful and intelligent girls around me. I mean, priorities, amirite? I was handsomely rewarded. But I really did believe in what I was doing and, most of all, I was just done being governed by these inadequate grownups and their middle-aged compromises and failures.

Smash-cut. Thirty-some years later…

My teenage son, almost 16—God forgive me—has inherited my talent for provocation and my outsize jones for justice. He is more mature than I was, and far more calm (he is not undiagnosed, you see). But—when he finds injustice or blinkered stupidity parading before him, he, like his father, cares little for what “polite society” will think. He stands up—and he does it most of all, not for himself, but for others who can’t always stand up for themselves. I admire him very much.

Yesterday he took two full-sized flags on flagpoles with him to school. An American flag and a Progress Pride flag, which many people think of as the “trans” flag. His intention was to protest the incoming Trump administration’s depraved cruelty.

He was not allowed to have the flags with him in school and was required to leave them outside. The explanation he was given was that it was “disruptive,” the classic line. And an even better one: “If we allow you to carry these flags, we would have to allow the carrying of a Trump flag.” Yes, and? (Was anyone in the school agitating to carry a Trump flag? Would anyone in this particular school community—a public charter, where all of the district’s assorted glorious weirdos end up and where there is certainly a greater concentration of gender non-conforming kids than any other school in the district—respond to his carrying the trans flag by coming to school with a Trump flag? Is this a real concern, or a mealy-mouthed dodge?)

For the record, I think the principal of this school is a wonderful person. He’s got a tough, thankless job. He is a good and fair man. And he respects my son. But this does not mean that he didn’t stoop to the usual condescending arguments, because the truth is that schools are factories for turning out conformists, and dissent may only appear in heavily prescribed ways.

But I told my son “that he would likely be able to find other ways to express himself that won’t run into [the principal’s] censorship requirements.” That’s how I explained it to my wife by text. She responded by affixing a gold star to my text, meaning: Look at you, husband! You have matured!

It’s true that my views have become more nuanced. I am anti-censorship. Yet I know there are few easy answers. One more example, from recent headlines.

The Supreme Court is considering whether to uphold laws in states that have required age verification for pornography sites. The ACLU, one of my long-time hero organizations, is against this, because of concerns that such laws will prevent adults from accessing constitutionally protected content. The court struck down portions of laws in the 1990s that had the same aim: to protect minors from pornography. At that time, however, secure, private age-verification systems did not exist. Now they do. And so I think the court should uphold age-verification requirements.

Minors should not see hardcore pornography. They will see it, regardless, as I did when I was a teenager. But I saw it rarely, on the surreptitiously possessed VHS and secret magazine stashes of my friends. Today’s children and teenagers have instant access to infinite pornography, a great deal of which is far beyond what almost anyone would consider “normal” sexuality. Mature adults with healthy sexual relationships may be able to understand and even celebrate the variety of consensual human sexual acts, but underage virgins don’t have a chance of doing so. This should be obvious to everyone (except teenagers, perhaps—that’s why they still need adults to protect them).

Let’s be crystal clear. If anyone at all tries to use age-verification requirements to prevent minors from accessing information about sex, about birth control, about STDs, about LGBTQ issues; about pregnancy and abortion; about any kind of health issues whatsoever, physical or mental; or to prevent minors from accessing the myriad artistic works that reference or depict human sexuality in non-pornographic contexts; I am one hundred percent opposed. Will people attempt to use age-verification laws to ban non-pornographic material and health information and art? Of course they will. Will sensible people fight back? Of course they will. Because there is nuance, and because a lot of Americans are vastly illiterate fools, and because human beings are generally bad to mediocre in everything they do. But it’s okay. We’ll survive. We’ll fight back against the scolds and prudes and, in the meantime, help our children better navigate their world.

New Study Questions Trump Derangement Syndrome Diagnoses

The DSM has yet to add Trump Derangement Syndrome (TDS) to its catalog of mental illness. Perhaps this is because the definition is controversial. Originally, it was believed that TDS described a collection of symptoms related to an exaggerated, almost paranoid, concern about what Donald Trump would do when handed the most powerful job in the world. Members of his MAGA movement, and its performative right-wing enablers in the Opinion Industrial Complex, have delighted in diagnosing their political opponents with the ailment, although few of them are mental health professionals.

But, in an interesting twist, many psychiatrists believe the syndrome has been poorly understood until now. A new meta-study, compiling hundreds of peer-reviewed studies, strongly suggests that an entirely different cohort than originally identified may be most at risk. In short, TDS is now believed to affect Trump voters most of all, indeed almost exclusively.

In the new study’s abstract, Dr. Bernard Francis Snikt, MD, describes the team’s findings.

Contrary to popular assumption, Trump derangement, rather than presenting as comorbid with left-leaning politics, in our research presents as statistically exclusive to those members of the American electorate who cast votes for Donald John Trump. This was initially surprising to our team, and we determined to be very cautious in drawing any inferences; however, as we delved further into the data from the 624 peer-reviewed studies we analyzed, it became difficult to support any other conclusion than that which we have reached.

The term “[name of President] Derangement Syndrome” can be traced to the psychiatrist Charles Krauthammer, who was involved in the creation of the DSM-III, served in the Carter administration, and later became a conservative commentator. In 2003, he coined the phrase to refer to critics of the George W. Bush presidency (“Bush Derangement Syndrome”). That coinage appears to have been a lighthearted jape at the expense of worried Democrats who feared that a President of demonstrated incuriosity, dim-wittedness and bellicose rhetoric might involve the United States in disastrous foreign wars.

After President Bush involved the nation in disastrous foreign wars, the term appears to have been forgotten, only to be resurrected more than a decade later in reference to President Trump. According to CNN commentator Fareed Zakaria, TDS was defined as “hatred of President Trump so intense that it impairs people’s judgment.” Our research demonstrates that the exact opposite is closer to the truth.

The “derangement” at the center of TDS turns out to be a result of the cognitive dissonance required for an American voter to elect, and now, reelect, an historically and uniquely unfit person to be President of the United States. Prior to Donald Trump’s first election, partisans would routinely invoke the notion of “lack of fitness” for office; however, if nothing else, the Trump era has proven that our conception of language—quaint notions like the meaning of words and their expressive value—has become inadequate.

Even the term “derangement” may no longer be an adequate description for the mental health status of voters who, against their own stated priorities in many cases, would vote to elect a man lacking most of the stated moral and ethical values of previous candidates, even if those values were mostly aspirational. Beyond personal values, Mr. Trump has demonstrated little interest in or aptitude for the basic rules of governance, scant awareness of the Constitution, and vast ignorance concerning history, law, international affairs, science, mathematics, literature, human rights, balance of power and basic human decency.

And that was all before his first term.

We can now also add to the list the fact that he is a convicted felon, an evident conman, an accused child rapist, a twice-impeached insurrectionist, and a self-dealing fraud. He is also an inveterate liar, a cheating husband, and a racist, misogynist, homophobic, transphobic petty tyrant.

What but “derangement” could possibly characterize the mindset of a voter who would send this person back to the White House? As our analysis will show, there are no words.

It is unclear whether the DSM VI, which is currently under development, will include these latest findings about TDS. It is sure to be controversial. Ironically, it may depend on how many members of the American Psychiatric Association suffer from the syndrome.

A Republic, Madam—If You Can Keep It

At high noon EST tomorrow, an incontinent 78-year-old racist, convicted felon, child rapist, conman, fraudulent, failed businessman and serial philanderer; a twice-impeached traitor, former cokehead, current speedfreak and world-class ignoramus; a textbook malignant narcissist who never read a textbook; a vicious, cruel, inhumane petty tyrant with dementia who is known to smell like a mixture of flop-sweat and fresh feces…

…will take the oath of office, an oath he previously took, then threw down on a filthy nightclub bathroom floor and anally raped, live on TikTok…

…after being elected by a bare plurality of uneducated, uninformed, heavily manipulated voters, who want him to lower the price of eggs by a few cents, persecute the vanishingly small transgender community and deport brown-skinned immigrants and their citizen children, while more than 80 million eligible voters stayed home.

This, too, shall pass, because one day this epic piece of human garbage will drop dead, and it can’t happen soon enough. In the meantime, his billionaire cronies will loot the treasury, and the United States of America, as we have known it, will vanish, forever, replaced by the declining, pathetic, failing country we chose to become.

A Real Man

My son and I were just at Dick’s Sporting Goods at the mall up the road. As we left the store, a family—dad, mom, a few little kids, maybe another relative—paused in front of the door to put their winter wear back on before stepping outside. As the double doors opened, the mom said, “Oh, my God, that’s cold!”

Dad, dressed only in jeans and a tee-shirt, dismissed her, saying, “It’s not that cold.”

It was 18 degrees Fahrenheit, today’s high.

We have long lived in a world where a lot of men, regardless of specific culture or nationality, feel they must perform a version of masculinity utterly divorced from reality. A masculinity impervious to weather, to sickness, to pain, both physical and mental. We train each generation of young men to ignore the evidence of their senses, their logical minds, and their feelings. By “we” I mean every last one of us. Not just dads.

Moms, too. Every type of relative. Friends and peers. Boys and girls, and women. Smart people and stupid people. Educated and uneducated. From the far-right to the far-left and on around back to the far-right again.

It’s ingrained and thoughtless. It takes conscious work to break out of the habit. This is worth doing, because there may be no greater source of psychological pain for men than falling short of this ridiculous image of a “real man.”

Personally, I think the present moment has been particularly egregious in this respect. When I was growing up, it felt very much like these hoary old stereotypes were being broken down, that what it meant to be a man or a woman was loosening up, and it was okay to be what you were, rather than what someone told you you were supposed to be. This doesn’t feel so true anymore.

It’s what’s insidious and depressing about otherwise purely hilarious and campy shit like this, also seen at the mall:

It’s a little difficult to even un(ten-)pack this level of camp. Is it deliberate camp, the lesser form of camp, according to Susan Sontag? Or is it accidental camp, the purer form? Is this image, on some level, attractive to anyone or admirable or an object of wish fulfillment? Does anyone actually think this is what a “real man” would be?

So excited about the latest in a great modern splatter franchise...

The screenplay is going to be improvised this time. Just wanted to celebrate the return to office of the first president to be wholly constructed out of diarrhea and rotting garbage stretched across an armature of Russian plastic.

This Week Appears to Be Some Kind of Cruel Cosmic Joke

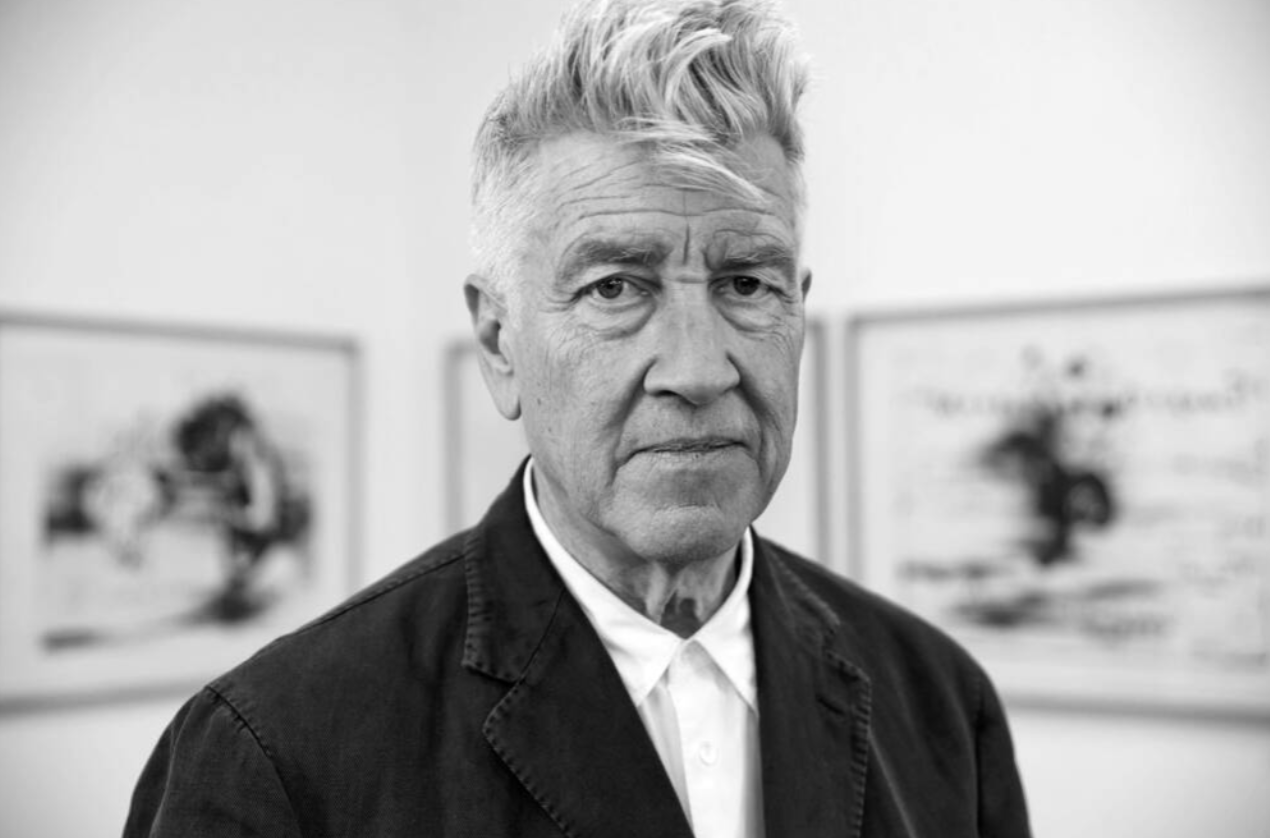

David Lynch 1946 - 2025

Pam Bondi is an Olympic Gold Medal Cunt

I deleted my latest Reddit account and deactivated my Bluesky account recently. I almost never use Instagram, but I kept my decade+ account there because it’s literally the only access I have to almost all of the people from my life. I don’t intend to use it much.

But I do need it, because there are times when I have to contact people I haven’t heard from in a while. I muted a particular person from my distant past so the site is minimally usable to me. I needed it yesterday, when I learned that one of my close childhood friends blew his brains out over the holidays. I had to reach out to a couple people to verify and commiserate.

I'm sure I will return to that subject later, because it deserves it and I am gutted and furiously angry at the world right now.

Today’s subject: now that I no longer have any place on the burning shitsack that is the internet to publicly vent my spleen and receive regular dopamine hits as the dumbest people in the history of the world argue with me, I have to find a new bottomless pit to throw my worthless thoughts into.

So why not this blog? I can tappity tap on my keyboard and spew my usual self-righteous bile and at least receive a modest dose of my favorite brain chemical, while (almost) no one has to see it, and I can spare (most of) my friends and acquaintances, and the random jerks online, from having to reckon with what an asshole I am.

Pam Bondi is ~~Trump’s~~ Rapist McDiarrheapants’s latest nominee for Attorney General, after rapist Matt Gaetz dropped out. (I’m sure Blondi would rape women if she could.) I watched AT MOST two minutes of her confirmation hearing this morning, and I can confirm that she is the rudest nominee I have ever seen in my life. When asked who won the 2020 election, Bondi said, “Joe Biden is President.” When the Senator asked her to actually answer the question, she sat stone-faced.

Now, lots of nominees are assholes. After all, their mere presence indicates they hope to be placed into the office for which they’ve been nominated, and you have to be at least a Level 3 Asshole to even want that. But this particular…specimen, nominated for the number one law enforcement job in the United States, can’t say Joe Biden won the 2020 election, even now. Can’t say that she would be independent of the White House. Can’t say that she won’t prosecute ~~Trump’s~~ Bitchass Crybaby’s political enemies. Won’t, in fact, answer any questions but, like a well media-trained chimpanzee, will only respond in political talking points.

So that’s all, that’s the post. I feel 3% better! Pam Bondi is a despicable cunt, and she can go ahead and get fucked forever.

Okay, now we're getting somewhere...

This little movie was made with Google’s Veo 2, an “AI” video generator.

My Sad News Today

I am using writing and outrage at Trumpworld to distract myself from the sorrow I feel today, having learned that one of my childhood best friends died by suicide over the holidays.

At some point, I will find the peace to write a remembrance. Today, I am hollowed out. Though I haven’t seen him in many years, I always hoped we would reconnect one day. Now that will never be.

What came to mind, because it’s just so fucking true, is this line, typed out on a computer screen by Stephen King surrogate Richard Dreyfuss in Stand by Me:

RIP KFMIII

Defending Pete Hegseth

The Republicans on the Senate Armed Services Committee have truly distinguished themselves today. Pete Hegseth is a far-right Christian Nationalist, a renowned drunk and serial philanderer, a prolific misogynist and sexual abuser of women, and, if confirmed, the least qualified Defense Secretary (or Secretary of War) in our nation’s history.

Those facts, along with his having been a Fox News personality, are, of course, what attracted serial rapist and TV enthusiast Donald Trump to him as a nominee.

Trump’s proxies on the committee have offered a creative defense, summed up best by the junior senator from Oklahoma, Republican Markwayne Mullin:

“How many senators have showed up drunk to vote at night? [titters from audience] Have any of you [Democrats] asked them to resign and step down from their job? And don’t tell me you haven’t seen it because I know you have. And how many senators do you know have gotten a divorce for cheating on their wives? Did you ask them to step down? No. But it’s for show. You guys make sure you make a big show and point out the hypocrisy, because the man’s made a mistake. And you want to set there and say he’s not qualified? Gimme a joke [sic]. It is so ridiculous that you guys hold yourself at this higher standard you forget you gotta big plank in your eye.

“We’ve all made mistakes. I’ve made mistakes and, Jennifer, thank you for loving him through that mistake. Because the only reason I’m here and not in prison [emphasis mine] is because my wife loved me, too.” [applause and cheers]

At what point in your childhood did you learn that *But all of you are also assholes* is not a great rejoinder to *You’re an asshole*? I’m thinking I was perhaps seven or eight?

Crossing the Rubicon

Two thousand and seventy-three years ago this month, Julius Caesar led his troops across the river Rubicon. The river formed the northern border of what we might think of as Rome County, and it was illegal to bring an army into the territory. When he traitorously did so, he de facto declared war on the state, saying, according to some sources, iacta alea est.

The die is cast.

What happened next was civil war and the ascension of Julius Caesar to the position of dictator perpetuo, dictator-for-life. ~~We all know~~ Some of us, who are not illiterate, know how that went. But, in spite of the yeoman’s work of Brutus, Cassius and Decimus, alas, the restoration of the Republic was not to be.

Yesterday evening, the Justice Department released half of former special counsel Jack Smith’s report on the Trump indictments. While the half of the report that details the classified documents case is still on ice, thanks to [check notes] a judge appointed by Trump himself, the released half details Trump’s illegal attempts to remain in power, after losing the 2020 election to Joe Biden. Here’s what Smith had to say about that case in the report, as quoted in today’s New York Times:

“The department’s view that the Constitution prohibits the continued indictment and prosecution of a president is categorical and does not turn on the gravity of the crimes charged, the strength of the government’s proof or the merits of the prosecution, which the office stands fully behind,” Mr. Smith wrote.

He continued: “Indeed, but for Mr. Trump’s election and imminent return to the presidency, the office assessed that the admissible evidence was sufficient to obtain and sustain a conviction at trial.” [emphasis mine]

This is as gobsmacking a statement as has ever been made concerning an American president and the fact that it refers to an American president-elect, set to be sworn in for a second term in one week, pushes it beyond comprehension. This is by design, of course. Smith wants to shock us and shake us. But in that, he has failed.

When a leader is as corrupt as Donald Trump—a level of corruption simply never before seen in our politics—and that corruption has been almost entirely out in the open for years, our collective cognitive dissonance prevents us from even understanding what’s happening, let alone preventing it. We can never go back to our illusions that we elect reasonably qualified presidents to serve us, who sometimes miss the mark in spite of our hopes.

We have crossed the Rubicon. We have reelected a criminal president, the only one we knew to be a criminal—a convicted felon—before we elected him.

The American Empire has long been compared to the Roman, but mostly in the sense of its predicted decline and collapse. But Rome didn’t fall when Caesar seized power. Nor did it fall when the conspirators assassinated him. The empire continued on for another five hundred and twenty years.

But the dream of the Republic was dead.

Nothing but Blue Sky

I recently joined Bluesky, for some reason, and I spent the morning deleting most of my posts and comments so far. Actually, the reason I joined was that I have heard that, if you hope to publish writing one day, you have to show publishers that you have a social media following. I made an account under a name similar to the one I write under.

But I did not make a proper plan for how I would conduct myself on the site. I am very, very bad at social media. I actually despise social media. I think social media has been, on balance, an incredibly destructive force in our culture. (But really only “incredible” if you suffer from the naive delusion that most people are reasonable and not profoundly stupid.)

I’m bad at social media because I take it both too seriously and not seriously enough. I foolishly attempt to have actual conversations and arguments with people, which most people in my life seem to understand is not possible, and not even what social media is for. Then I become enraged at the casual stupidity and ignorance of almost everyone I interact with, and I tell them so.

Which means my account quickly becomes useless for the purpose for which I ostensibly set it up in the first place, because apparently writers are required to have one and to collect thousands of followers. So I’ve deleted every substantive post or response I made, especially the angry ones, and instead made a bunch of joke posts that featured Trump quotes from his press conference today and details about how much, and in what form, he shit his diapers as he said them. Is this the right way to do it?

I don’t know how to navigate the competing notions that I personally hate social media and want to delete my accounts and never even read the news again, let alone be constantly exposed to the gutting stupidity of my fellow humans, and that I need to build an audience if I want to write books. It’s depressing.

What I’ve realized, though, is that my low social media IQ stems from, perhaps, the biggest problem I have in my life. Namely, as a person with ADHD, I speak without thinking about 75% of the time. Now, in my fifties, I am zeroing in on this habit and trying to learn how to reduce that percentage dramatically. I can sometimes stop myself from posting a little more easily. But overall, my emotions boil up until I’m in a fog of rage, and I don’t stop myself.

So, I guess I’ll work on that. I’ve been thinking that I might need an actual, physical gag.